Hot Right Now

Mysterious artifacts with engravings of ‘Aliens’ and ‘Spaceships’ unearthed in Mexican Cave

Mysterious artifacts depicting strange beings with elongated heads and oval-shaped eyes, as well as objects that resemble "spaceships," have been unearthed by a group of explorers in a Mexican cave.

A group of explorers has discovered in a Mexican cave what they boast is the best evidence...

The Egyptian Ankh Cross Found In Mexico

Calixtlahuaca, present-day Toluca, has one of the most mysterious objects discovered in Mexico. Monument number 4, also called the Cross Altar or Tzompantli, shares an incredible similarity to the ankh cross of ancient Egypt.

The ankh, also known as the key of life, was the ancient Egyptian hieroglyphic character that read "life." It actually represents the...

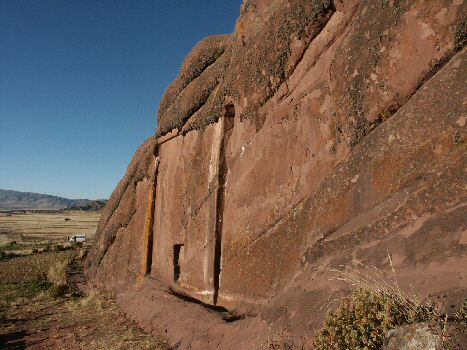

The mysterious “Gate of the Gods” at Hayu Marca, Peru

An ancient legend speaks of a mysterious door located in the vicinity of Lake Titicaca. This door will open one day and welcome the creator gods of all mankind. These gods will return in their "Solar Ships," and all mankind will be in awe.

Strangely, such a door seems to exist...

Veteran Pilot Comes Forward to Report Orange Orb UFO in North Carolina

The Carolinas are a hotbed of documented UFO sightings. In fact, the Queen City of Charlotte has been rated among the top 10 large North American cities for UFO sightings. So far, there have been 153 sightings of mysterious lights, discs, and orbs in the sky since 1910.

Last year, we shared...

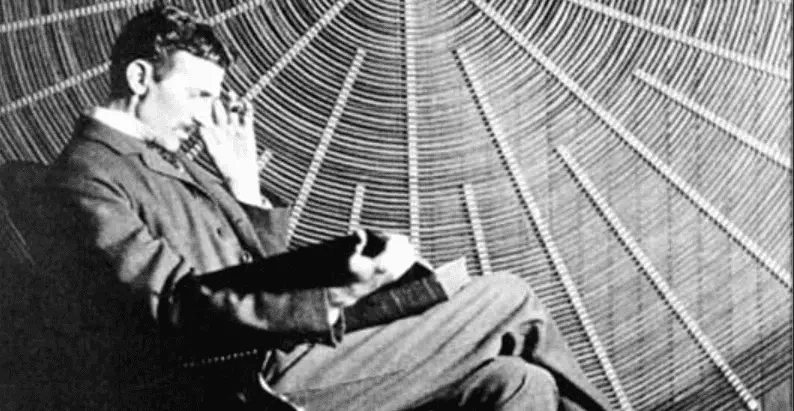

Bizarre: Nikola Tesla Was Brought To Earth From Venus, Says Declassified FBI File

The letter mentions how Nikola Tesla successfully invented a device that could be used for Interplanetary Communication.

It explains that Tesla engineers built the device in 1950, after Tesla died, and have been in close touch with 'Space-Ships' ever since.

Furthermore, the document from the FBI mentions how Tesla...

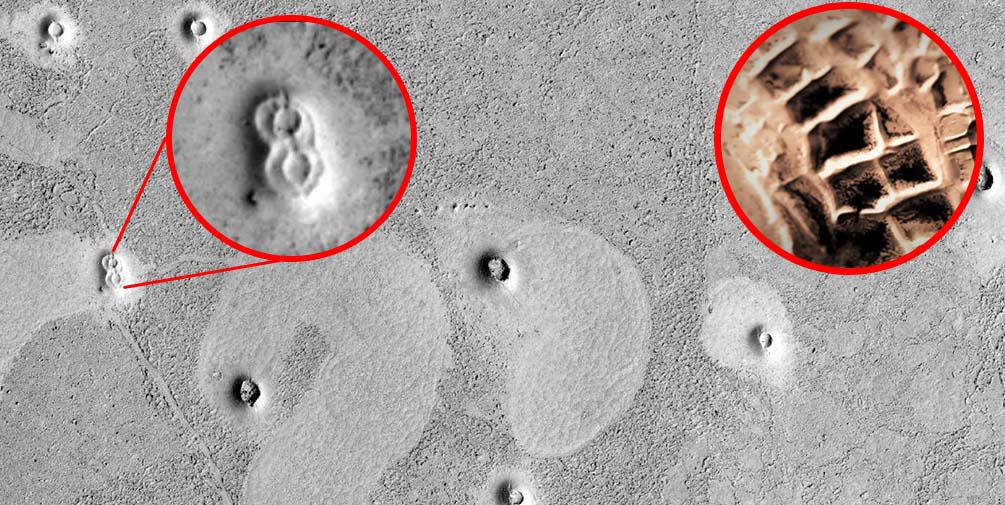

“My battery is low and it’s getting dark”: What one of NASA’s rovers taught...

Mars is one of the planets in our solar system that humans know most about, and for good reason. The "Red Planet" has been lauded by scientists because of its similarity to Earth. The similarity convinces many that humans may be able to fashion a second home out of it....

Trending

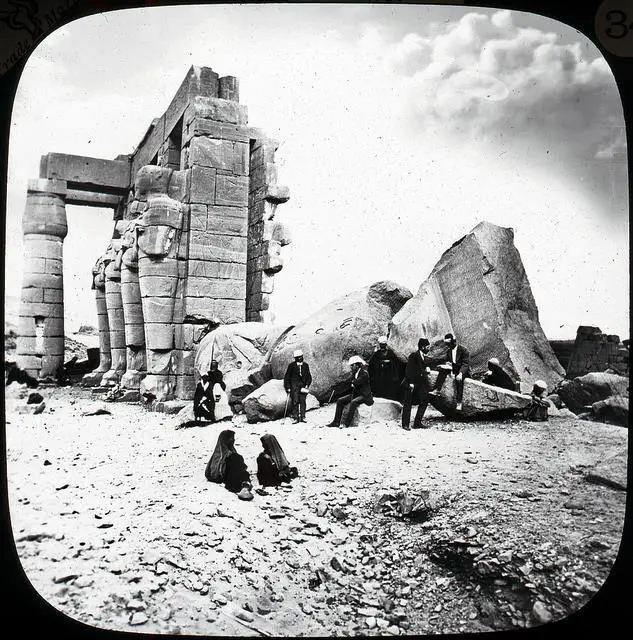

cleopatra

Who was Cleopatra?

When we hear the name Cleopatra, we think of a beautiful and alluring woman with a tragic story. But who was she? Cleopatra was the last active pharaoh of Ptolemaic Egypt, briefly survived as pharaoh by her son Caesarion. After her reign, Egypt became a province of the...

How did Cleopatra die?

Cleopatra VII is the queen of Egypt and one of the most famous women in history. Cleopatra rules over the prosperous Egyptian empire. She is beautiful, intelligent, and a master of manipulation. Every man she meets falls in love with her, including Julius Caesar and Mark Antony.

Over time, Cleopatra's ambitions...

What did Cleopatra look like?

Cleopatra, the Queen of Egypt, was one of the most renowned figures of her time. She was a beautiful, intelligent, and charismatic personality who used her power and influence to shape the course of history. However, her appearance is still a mystery to the world, as there...

LATEST ARTICLES

Russian Scientists create breakthrough technology that can transmute any element into another

Russian scientists have created a BREAKTHROUGH technology that can transmute any element into another. During a press conference held in Geneva, Switzerland, Russian scientists announced a technology that would make it possible to transmute any element into another element in the periodic table.

The discovery was produced by two...

Scientists have found a second pyramid hidden within the Pyramid of Chichen Itza

New analysis of the Pyramid of Kukulkan has allowed experts to make a fascinating discovery: there is another pyramid located within the Pyramid of Kukulkan at Chichen Itza. Experts say that the structure appears to have a staircase and perhaps an altar at the top.

Mexican archaeologists have discovered a...

The Great Pyramid of Giza is located at the exact center of Earth’s landmass

Many people consider the Great Pyramid of Giza to be one of the oldest, greatest, most perfect, and most scientific 'monuments' on the face of the Earth, created thousands of years ago. However, many people are unaware that the Great Pyramid isn't only an architectural and engineering marvel, it...

No, this is not an actual 250-million-year-old microchip

Short answer: NO. While many people love the idea of ancient civilizations existing on Earth millions of years ago, this one, simply put, IS NOT. Not long ago we wrote an article about a curious artifact that was discovered in Russia. Embedded into rock, a curious 'device' which according...

3 ancient texts that completely shatter history as we know it

There are numerous 'controversial' ancient texts that have been found throughout the years around the globe. Most of them are firmly rejected by mainstream scholars since they oppose nearly everything set forth by mainstream historians.

Some of these ancient texts are said to shatter mainstream beliefs and dogmas that have...

Apollo Astronaut claims aliens prevented a nuclear war on Earth to ensure our existence

Edgar Mitchell, the sixth man to walk on the Moon, made fascinating claims about alien life when he stated that the existence of alien visitors is kept a secret from the public, not due to fear of widespread disbelief but rather a fear that the...

Declassified CIA documents confirm humans with special abilities can do ‘impossible things.’

For centuries, researchers around the globe have speculated that certain human beings are able to achieve FASCINATING things that others are not able to do. Now a set of declassified documents proves what many have speculated for decades.

As it turns out, for the last couple of...

Nostradamus predicted Donald Trump as being President of the US

According to interpretations of Quatrain 81 written by Nostradamus, the great prophet foresaw the election of Donald Trump as president of the United States.

Michel de Nostredame, historically known as Nostradamus, is considered by many to be one of the most accurate prophets in history. In his book ‘The Prophecies’,...

The Missing Capstone of the Great Pyramid of Giza

There are numerous mysteries related to the Great Pyramid of Giza, but there is one which researchers are unable to explain: Why is the Great Pyramid, one of the most precise ancient structures on the surface of the planet, missing a capstone on its top? An interesting story is...

Is this evidence that Dragons exist? Man films giant creature resembling a Dragon

Get ready, people, dragons are coming. Sort of.

A man in China filmed, ironically, a giant creature resembling what many believe is a dragon near the Jade Dragon Snow Mountain. But after researching a bit more, we found some very interesting things.

A video uploaded to social...