Hot Right Now

Five Out-of-Place Artifacts That Challenge Mainstream History

There are perhaps a dozen discoveries made on Earth that challenge conventional, mainstream science in every way.

Most scholars have decided to simply ignore these findings since they are unable to place them anywhere inside their ‘mainstream history.’ But just what are these discoveries?

Are they...

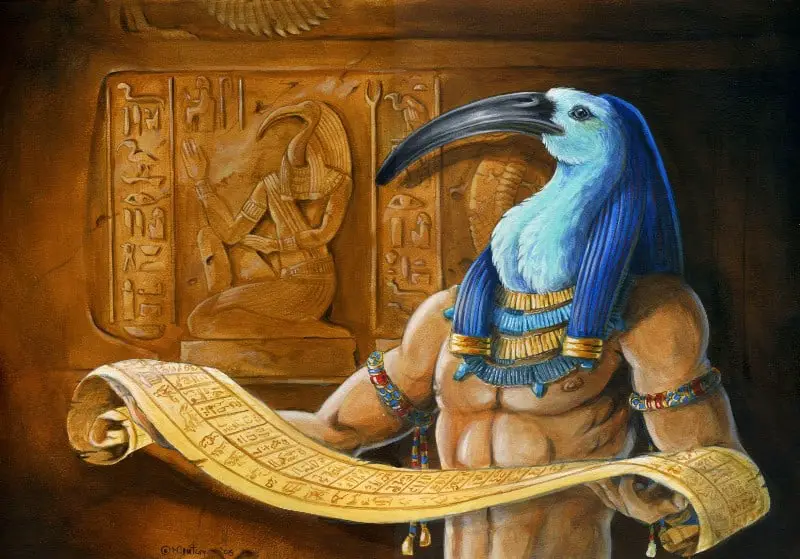

Akhenaten: The Most Mysterious Pharaoh Of Ancient Egypt

Ancient texts describe a period in the history of ancient Egypt known as the Predynastic era, when 'Gods' ruled over Egypt for hundreds of years. Akhenaten could easily have been a pharaoh who belonged to that period, only out of place and time. Some #AncientAlien theorists interpret...

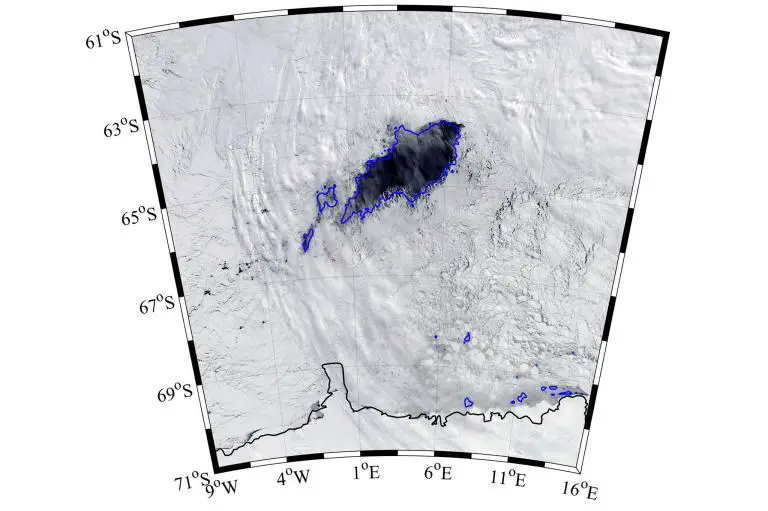

A massive, mysterious hole has just opened up in Antarctica and experts can't explain...

A massive, mysterious hole has just opened up on the surface of Antarctica, leaving experts unable to explain what caused its formation.

The hole, as large as Lake Superior or the state of Maine, with an approximate area of 30,000 square miles, has created confusion among experts, who...

Ancient Sumer, The Anunnaki, And The Ancient Alien Theory

Sumer is a historical region of the Middle East, the southern part of ancient Mesopotamia, between the alluvial plains of the Euphrates and Tigris rivers.

The Sumerian civilization is considered by many authors to be the first and oldest civilization on the planet, even though there is...

DNA Results For The Elongated Skulls Of Paracas: Part 3 Of 4: “Cleopatra Of...

Meet the “Cleopatra Of Paracas.”

Brien Foerster presents further details about the fascinating Paracas skulls from Peru. This time, DNA tests performed on a skull that belonged to the Paracas culture revealed fascinating results. The curious skull was nicknamed Cleopatra because of its unusual shape and obvious traces of red...

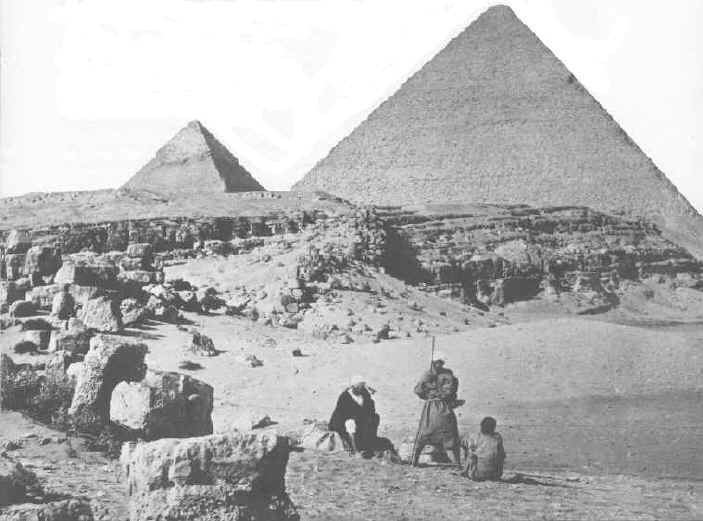

15 extremely rare, ancient images of the Pyramids of Giza you’ve probably never seen

In this article, we take a look at fifteen of the most impressive, rare and ancient images of the Pyramids at Giza we've managed to find. Behold fifteen breathtaking images of the most incredible ancient structures on the planet like you've never seen them before.

The Pyramids at the Giza plateau...

Trending

cleopatra

How did Cleopatra die?

Cleopatra VII was the queen of Egypt and one of the most famous women in history. Cleopatra ruled over the prosperous Egyptian empire. She was beautiful, intelligent, and a master of manipulation. Every man she met fell in love with her, including Julius Caesar and Mark Antony.

Over time, Cleopatra's ambitions...

What did Cleopatra look like?

Cleopatra, the Queen of Egypt, was one of the most renowned figures of her time. She was a beautiful, intelligent, and charismatic woman who used her power and influence to shape the course of history. However, her appearance is still a mystery to the world as there...

Who was Cleopatra?

When we hear the name Cleopatra, we think of a beautiful and alluring woman with a tragic story. But who was she? Cleopatra was the last active pharaoh of Ptolemaic Egypt and was briefly survived as pharaoh by her son Caesarion. After her reign, Egypt became a province of the...

LATEST ARTICLES

Nikola Tesla and numbers 3, 6 and 9: The secret key to free energy?

If you only knew the magnificence of the 3, 6 and 9, then you would have a key to the universe. - Nikola Tesla

While many people draw a connection between Tesla and electricity, the truth is that Tesla’s inventions went far beyond it. In fact, he made groundbreaking discoveries...

What on Earth are the mysterious pyramids of Antarctica?

The 'Pyramids' of Antarctica are one of the most controversial topics on the internet.

Some label them as conspiracy theories while others believe they are just small pieces of the many structures found hidden beneath Antarctica.

Saying that there was a civilization that developed on Antarctica...

4 Thought-provoking documentaries suggest ancient sites on Earth are connected

What are the odds that countless ancient sites around the globe are connected randomly? What if a mind-boggling pattern lies hidden within these structures?

The first thing that comes to my mind is, how could ancient cultures have placed numerous ancient sites with such precision? Why did...

Mind-boggling documentary shows connection between ancient sites and the Stars

There is a global phenomenon that has existed, ignored by many, for thousands of years on Earth. An alignment between the stars and ancient sites built thousands, if not tens of thousands, of years ago tells us how sophisticated ancient cultures actually were. It seems that there is a forgotten...

Russian Scientists create breakthrough technology that can transmute any element into another

Russian scientists have created a breakthrough technology that can transmute any element into another. During a press conference held in Geneva, Switzerland, Russian scientists announced a technology that would make it possible to transmute any element into another element in the periodic table.

The discovery was produced by two...

Scientists have found a second pyramid hidden within the Pyramid of Chichen Itza

New analysis of the Pyramid of Kukulkan has allowed experts to make a fascinating discovery: there is another pyramid located within the Pyramid of Kukulkan at Chichen Itza. Experts say that the structure appears to have a staircase and perhaps an altar at the top.

Mexican archaeologists have discovered a...

The Great Pyramid of Giza is located at the exact center of Earth’s landmass

Many people consider the Great Pyramid of Giza to be one of the oldest, greatest, most perfect, and most scientific 'monuments' on the face of the Earth, created thousands of years ago. However, many people are unaware that the Great Pyramid isn't only an architectural and engineering marvel, it...

No, this is not an actual 250-million-year-old microchip

Short answer: no. While many people love the idea of ancient civilizations existing on Earth millions of years ago, this one, simply put, is not evidence of that. Not long ago we wrote an article about a curious artifact that was discovered in Russia. Embedded into rock a curious 'device' which according...

3 ancient texts that completely shatter history as we know it

There are numerous 'controversial' ancient texts that have been found throughout the years around the globe. Most of them are firmly rejected by mainstream scholars since they oppose nearly everything set forth by mainstream historians.

Some of these ancient texts are said to shatter mainstream beliefs and dogmas that have...

Apollo Astronaut claims aliens prevented a nuclear war on Earth to ensure our existence

The sixth man to walk on the Moon, Edgar Mitchell, made claims about alien life when he stated that the existence of alien visitors is kept a secret from the public, not due to fear of widespread disbelief, but rather a fear that the...