Hot Right Now

7,000-year-old ‘Reptilian’ statues discovered in Mesopotamia

What did ancient mankind try to depict with the 7,000-year-old Reptilian statues? Did these enigmatic beings really exist on Earth? Or are they the product of ancient abstract art? The truth behind the reptilian-like figurines is fascinating and has left scholars in awe, ever since their discovery nearly a...

We Are Surrounded by Masonic Symbols – How Modern Logos Are Linked To Secret...

In the modern world, we are surrounded by countless logos, symbols, and graphs that make up our everyday life.

No matter where we look, willingly or unwillingly, we find ourselves immersed in a deep layer of information that is in turn filled with secret symbols and eerie meanings.

And while ancient societies...

The real World War I: 13,000 years ago

History had it all working apparently... the first world war happened thousands of years ago according to researchers who have found evidence of the first major military conflict at Jebel Sahaba. At a time when we were commemorating the (barely) one hundred years of the so-called First World War, researchers have...

Massive Easter Island Statues have bodies buried below the surface

As it turns out, the famous MOAI statues (dubbed by some the ancient TITANS of Rapa Nui) are not just massive heads. These enigmatic statues are complete and have massive bodies buried under the surface.

One of the greatest archaeological mysteries is without a doubt the mysterious MOAI statues located...

“Man-made metal” found embedded inside ancient Geode has experts stumped

Depending on the size of the geode, the largest crystals can take a million years to grow. So how on Earth is there a metallic, apparently man-made object embedded in one?

Throughout the years, researchers, archaeologists and ordinary people have come across a wealth of documented artifacts that have been ‘recovered’ from very ancient sediment,...

50 Images of Ancient Megaliths And Perfectly Shaped Stones That Defy Logic

It is not a mystery that thousands of years ago, ancient cultures around the globe had the ability to somehow move supermassive blocks of stone with extreme facility.

Despite lacking 'modern tools' to do so,...

Trending

cleopatra

What did Cleopatra look like?

Cleopatra, the Queen of Egypt, was one of the most renowned figures of her time. She was a beautiful, intelligent, and charismatic personality that used her power and influence to shape the course of history. However, her appearance and looks are still a mystery to the world as there...

How did Cleopatra die?

Cleopatra VII was the queen of Egypt and one of the most famous women in history. Cleopatra rules over the prosperous Egyptian empire. She is beautiful, intelligent, and a master of manipulation. Every man she meets falls in love with her, including Julius Caesar and Mark Antony.

Over time, Cleopatra's ambitions...

Who was Cleopatra?

When we hear the name Cleopatra, we think of a beautiful and alluring woman with a tragic story. But who was she? Cleopatra was the last active pharaoh of Ptolemaic Egypt and was briefly survived as pharaoh by her son Caesarion. After her reign, Egypt became a province of the...

News

LATEST ARTICLES

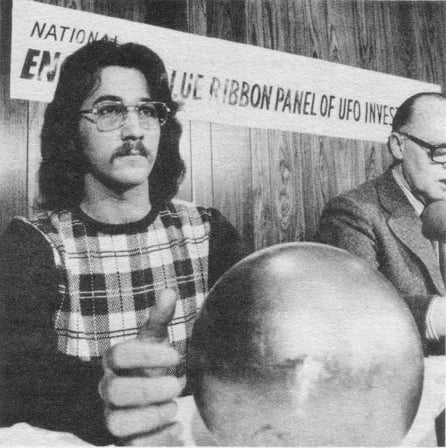

Decades after its discovery, the Betz Sphere remains a scientific mystery

The so-called 'Alien sphere' was discovered in 1974. After several tests, experts concluded that the object was a magnetic sphere sensitive to magnetic fields, numerous sound emissions, and mechanical stimulation. The sphere was able to withstand a pressure of 120,000 pounds per square inch, concluding that it was composed out...

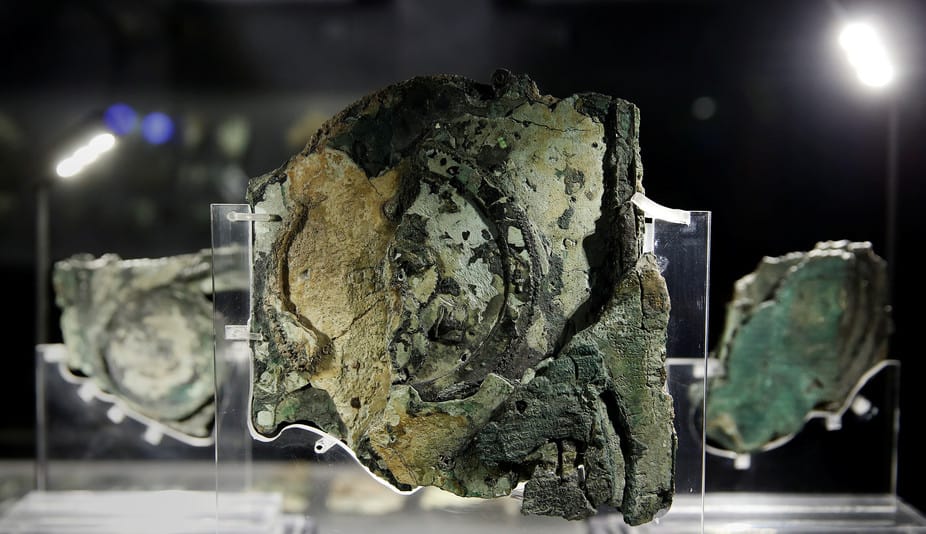

10 fascinating facts about the Antikythera Mechanism: A ‘computer’ created over 2000 years ago

The Antikythera mechanism is considered the FIRST analog computer in history. Researchers still have no idea who built it, what exactly it was used for, or when and where it was built.

Discovered in 1900 among the remains of a shipwreck, and regarded as the first computer made by humans, the Antikythera mechanism remained...

Eerie coincidence? The constant of speed of light equals the coordinates of the Great...

Is it just an eerie coincidence that the speed of light equals the coordinates of the Great Pyramid of Giza? The speed of light in a vacuum is 299,792,458 meters per second, and the geographic coordinate for the Great Pyramid of Giza is 29.9792458°N. Some say this is...

5 Mind-boggling mysteries about the Universe that science cannot explain

Why is it that the universe is expanding faster and faster? Are there other universes out there? What if black holes are in fact doorways to other worlds? Why is the universe filled with invisible matter? And why is gravity, one of the weaker fundamental forces, the only force...

The Great Pyramid of Giza consists of a staggering 2.3 million blocks

Not only does the Great Pyramid of Giza consist of around 2.3 million blocks transported from quarries located all over Egypt, it is estimated that 5.5 million tons of limestone, 8000 tons of granite and around 500,000 tons of mortar were used thousands of years ago to build this...

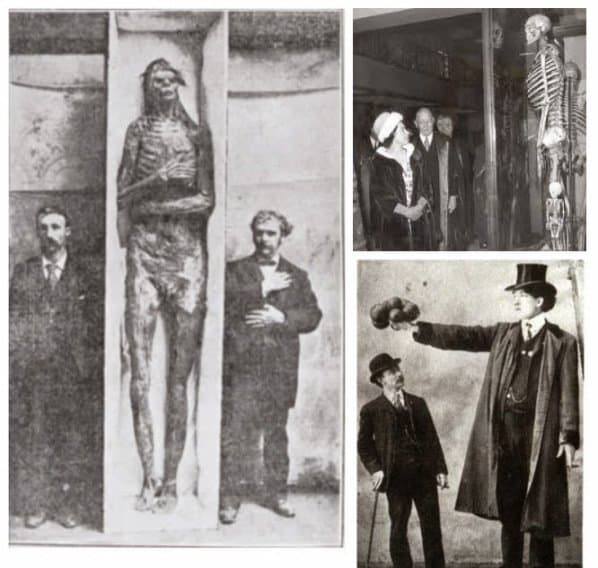

The mystery behind the 18 Giant skeletons found in Wisconsin

Their heights ranged between 7.6 feet and 10 feet and their skulls “presumably those of men, are much larger than the heads of any race which inhabit America to-day.” They tend to have a double row of teeth, 6 fingers, 6 toes and like humans came in different races. The...

Tesla’s Time Travel Experiment: I could see the past, present and future all at...

Apparently, Tesla too was obsessed with time travel. He worked on a time machine and reportedly succeeded, saying: ‘I could see the past, present, and future all at the same time.'

The idea that humans are able to travel in time has captured the imagination of millions around the globe....

The astonishing link between Sacred Geometry and the Universe

Have you ever thought about ‘Sacred Geometry’ and the mysterious connection to everything that surrounds us? As it turns out there are numerous mysteries that seem to connect mother nature and sacred geometry.

Sacred geometry proposes that it is possible to find in the universe, certain geometric patterns which are...

Do these NASA images reveal traces of ‘walled cities’ on Mars?

According to UFO hunters, there is conclusive evidence that an ancient civilization may have existed on the surface of Mars in the distant past. As proof, UFO hunters point to 'shocking' images taken on Mars showing what appear to be numerous structures and walled cities.

Located near Elysium Planitia...

Another massive ‘alien’ base found on the moon?

NASA's Lunar Reconnaissance Orbiter (LRO) was able to capture another strange object on the surface of Earth's natural satellite. According to UFO hunters, it is yet another anomalous structure which defies explanation.

According to researchers, the objects visible in the images are of a peculiar and striking geometry,...